2.9. Testing attacks against RobustBench models

In this tutorial, we show how to correctly import RobustBench models into SecML and how to craft adversarial evasion attacks against them.

Warning

Requires installation of the pytorch extra dependency. See extra components for more information.

[1]:

```python
%%capture --no-stderr --no-display
# NBVAL_IGNORE_OUTPUT
try:
    import secml
    import torch
except ImportError:
    %pip install git+https://gitlab.com/secml/secml#egg=secml[pytorch]
```

We start by installing RobustBench, a repository of pre-trained adversarially robust models written in PyTorch. All the models are trained on CIFAR-10. To install the library, open a terminal and run the following command:

```bash
pip install git+https://github.com/RobustBench/robustbench.git@v0.1
```

[2]:

```python
%%capture --no-stderr --no-display
# NBVAL_IGNORE_OUTPUT
try:
    import robustbench
except ImportError:
    %pip install git+https://github.com/RobustBench/robustbench.git@v0.1
```

After the installation, we can load any of the models offered by the library (see the RobustBench repository for the complete list):

[3]:

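```python
# NBVAL_IGNORE_OUTPUT
from robustbench.utils import load_model

from secml.utils import fm
from secml import settings

# Store the RobustBench checkpoints inside the SecML models directory
output_dir = fm.join(settings.SECML_MODELS_DIR, 'robustbench')

# Download (on first use) and load a CIFAR-10 model trained against Linf perturbations
model = load_model(model_name='Carmon2019Unlabeled', norm='Linf', model_dir=output_dir)
```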

This command creates a models directory inside the secml-data folder in your home directory. It then downloads the model specified by the model_name parameter into the robustbench subfolder. Because the result is a standard PyTorch model, we can test it directly on a CIFAR-10 sample.

[4]:

```python
# NBVAL_IGNORE_OUTPUT
from secml.data.loader.c_dataloader_cifar import CDataLoaderCIFAR10

# Load the CIFAR-10 training and test sets
train_ds, test_ds = CDataLoaderCIFAR10().load()
```

[5]:

```python
import torch
from secml.ml.features.normalization import CNormalizerMinMax

dataset_labels = ['airplane', 'automobile', 'bird', 'cat', 'deer',
                  'dog', 'frog', 'horse', 'ship', 'truck']

# Scale pixel values to [0, 1] using the range observed on the training set
normalizer = CNormalizerMinMax().fit(train_ds.X)

# Take the first test sample (a flat row of 3 * 32 * 32 = 3072 pixels)
pt = test_ds[0, :]
x0, y0 = pt.X, pt.Y
x0 = normalizer.transform(x0)

# Reshape to (batch, channels, height, width), the layout the PyTorch model expects
input_shape = (3, 32, 32)
x0_t = x0.tondarray().reshape((1,) + input_shape)

with torch.no_grad():
    y_pred = model(torch.Tensor(x0_t))

print("Predicted class: {0}".format(dataset_labels[y_pred.argmax(dim=1).item()]))
print("Real class: {0}".format(dataset_labels[y0.item()]))
```

```
Predicted class: cat
Real class: cat
```
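The normalization step above fits a min-max scaler on the training set and maps every feature (pixel) to [0, 1]. This is the range that the attack bounds lb=0, ub=1 assume later in this tutorial. The following is a minimal NumPy sketch of the same idea, assuming plain per-feature min-max scaling. The helpers fit_minmax and transform_minmax are hypothetical and are not SecML API. Random integers stand in for CIFAR-10 pixels.

```python
import numpy as np

def fit_minmax(X_train):
    """Per-feature minimum and maximum, computed on the training set only."""
    return X_train.min(axis=0), X_train.max(axis=0)

def transform_minmax(X, x_min, x_max):
    """Map each feature to [0, 1]; in this sketch, constant features map to 0."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return (X - x_min) / span

# Hypothetical stand-in for CIFAR-10: 4 flat "images" of 3 * 32 * 32 = 3072 pixels.
rng = np.random.default_rng(0)
X_train = rng.integers(0, 256, size=(4, 3 * 32 * 32)).astype(float)
x_min, x_max = fit_minmax(X_train)

x0 = transform_minmax(X_train[:1], x_min, x_max)  # shape (1, 3072), values in [0, 1]
x0_t = x0.reshape(1, 3, 32, 32)                   # (batch, channels, height, width)
print(x0.min(), x0.max(), x0_t.shape)
```

Since the scaler only sees the training range, a test sample can land slightly outside [0, 1] if one of its pixels exceeds that range.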

2.9.1. Load RobustBench models inside SecML

We can now import the pre-trained robust model into SecML. Since these models are all written in PyTorch, we just need SecML's PyTorch wrapper, CClassifierPyTorch.

To do so, we specify the input_shape of the data and feed the classifier the flattened version of the array (under the hood, the framework reconstructs the original shape):

[6]:

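```python
from secml.ml.classifiers import CClassifierPyTorch

# Wrap the PyTorch model: input_shape tells SecML how to reshape flat samples,
# pretrained=True marks the network as already trained
secml_model = CClassifierPyTorch(model, input_shape=(3, 32, 32), pretrained=True)

y_pred = secml_model.predict(x0)
print("Predicted class: {0}".format(dataset_labels[y_pred.item()]))
```

```
Predicted class: cat
```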
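The wrapper receives each sample as a flat row of 3 * 32 * 32 = 3072 features and rebuilds the image shape from input_shape before calling the network. The NumPy sketch below illustrates why this is lossless. It is an assumption about the mechanics, not SecML's code, and the helpers flatten and unflatten are hypothetical. A row-major flatten followed by a reshape to (batch, *input_shape) recovers the original tensor exactly.

```python
import numpy as np

input_shape = (3, 32, 32)  # channels, height, width

def flatten(batch):
    """(N, C, H, W) -> (N, C*H*W), row-major, like one sample per row."""
    return batch.reshape(batch.shape[0], -1)

def unflatten(rows, shape=input_shape):
    """(N, C*H*W) -> (N, C, H, W), the tensor layout the network consumes."""
    return rows.reshape((rows.shape[0],) + tuple(shape))

images = np.arange(2 * 3 * 32 * 32, dtype=float).reshape((2,) + input_shape)
rows = flatten(images)
print(rows.shape)                               # (2, 3072)
print(np.array_equal(unflatten(rows), images))  # True
```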

2.9.2. Computing evasion attacks

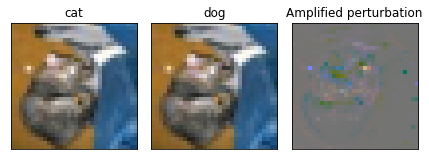

Now that the model is wrapped inside SecML, we can compute attacks against it. We will use the iterative Projected Gradient Descent (PGD) attack, with the perturbation bounded in l2 norm.
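The following minimal NumPy sketch of l2-bounded PGD on a hypothetical linear classifier makes the roles of dmax, lb, ub and the solver parameters (eta, max_iter, eps) concrete. It is not SecML's implementation. Several pieces are assumptions made for illustration: the normalized gradient step, eps used as a tolerance on the change of the objective, and the helpers project_l2_box, margin and pgd_l2. In the spirit of an error-generic attack, it minimizes the margin between the true-class score and the best competing score. A negative margin means the sample is misclassified.

```python
import numpy as np

def project_l2_box(x, x0, dmax, lb, ub):
    """Pull x back into the l2 ball of radius dmax around x0, then clip to [lb, ub]."""
    delta = x - x0
    norm = np.linalg.norm(delta)
    if norm > dmax:
        delta = delta * (dmax / norm)
    # Clipping never moves the point out of the ball, because x0 itself is in [lb, ub].
    return np.clip(x0 + delta, lb, ub)

def runner_up(scores, y):
    """Index of the highest-scoring class other than y."""
    masked = scores.copy()
    masked[y] = -np.inf
    return int(np.argmax(masked))

def margin(W, b, x, y):
    """Error-generic objective: true-class score minus best competing score."""
    scores = W @ x + b
    return scores[y] - scores[runner_up(scores, y)]

def margin_grad(W, b, x, y):
    """Gradient of the margin w.r.t. x for a linear model (current runner-up fixed)."""
    scores = W @ x + b
    return W[y] - W[runner_up(scores, y)]

def pgd_l2(W, b, x0, y, dmax, lb=0.0, ub=1.0, eta=0.4, max_iter=100, eps=1e-3):
    """Minimize the margin with normalized gradient steps and l2/box projection."""
    x = x0.astype(float).copy()
    best_x, best_obj = x.copy(), margin(W, b, x, y)
    prev_obj = best_obj
    for _ in range(max_iter):
        g = margin_grad(W, b, x, y)
        g_norm = np.linalg.norm(g)
        if g_norm == 0:
            break
        x = project_l2_box(x - eta * g / g_norm, x0, dmax, lb, ub)
        obj = margin(W, b, x, y)
        if obj < best_obj:  # keep the most adversarial point seen so far
            best_x, best_obj = x.copy(), obj
        if abs(prev_obj - obj) < eps:  # objective has stopped changing
            break
        prev_obj = obj
    return best_x

# Usage on a hypothetical 3-class linear model with 5 features in [0, 1].
rng = np.random.default_rng(0)
W, b = rng.normal(size=(3, 5)), np.zeros(3)
x0 = np.full(5, 0.5)
y0 = int(np.argmax(W @ x0 + b))  # attack the class currently predicted
x_adv = pgd_l2(W, b, x0, y0, dmax=0.5)
print("l2 distance:", np.linalg.norm(x_adv - x0))
print("prediction before/after:", y0, int(np.argmax(W @ x_adv + b)))
```

Projecting onto the ball and then clipping to the box is a cheap approximation of the exact projection onto their intersection. The result always satisfies both constraints.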

[7]:

```python
from secml.adv.attacks.evasion import CAttackEvasionPGD

noise_type = 'l2'  # Type of perturbation: 'l1' or 'l2'
dmax = 0.5  # Maximum perturbation (radius of the l2 ball)
lb, ub = 0, 1  # Bounds of the attack space. Can be set to `None` for unbounded
y_target = None  # None for an error-generic attack, or a class label for an error-specific one

# Should be chosen depending on the optimization problem
solver_params = {
    'eta': 0.4,       # step size
    'max_iter': 100,  # maximum number of iterations
    'eps': 1e-3       # tolerance of the stop criterion
}

pgd_attack = CAttackEvasionPGD(
    classifier=secml_model,
    double_init_ds=test_ds[0, :],
    distance=noise_type,
    dmax=dmax,
    lb=lb, ub=ub,
    solver_params=solver_params,
    y_target=y_target
)

# run() returns predicted labels, scores, the adversarial dataset and the objective value
y_pred_pgd, _, adv_ds_pgd, _ = pgd_attack.run(x0, y0)

print("Real class: {0}".format(dataset_labels[y0.item()]))
print("Predicted class after the attack: {0}".format(dataset_labels[y_pred_pgd.item()]))
```

```
Real class: cat
Predicted class after the attack: dog
```
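A quick sanity check after the attack is to measure how far the adversarial example moved from x0 and whether it stays inside [lb, ub]. The following is a hedged NumPy sketch. In the notebook the inputs would be x0.tondarray() and adv_ds_pgd.X.tondarray(). Here, hypothetical arrays stand in for them. The helper check_l2_budget is introduced for illustration and is not part of SecML.

```python
import numpy as np

def check_l2_budget(x0, x_adv, dmax, lb=0.0, ub=1.0, tol=1e-6):
    """Return (l2 distance, within budget?, within bounds?) for one sample."""
    dist = float(np.linalg.norm(np.ravel(x_adv) - np.ravel(x0)))
    in_budget = dist <= dmax + tol
    in_bounds = bool(np.all(x_adv >= lb - tol) and np.all(x_adv <= ub + tol))
    return dist, in_budget, in_bounds

# Hypothetical stand-ins for x0.tondarray() and adv_ds_pgd.X.tondarray().
x0 = np.full((1, 3072), 0.5)
x_adv = x0.copy()
x_adv[0, :4] += 0.2  # l2 norm of the change: sqrt(4 * 0.2**2) = 0.4
print(check_l2_budget(x0, x_adv, dmax=0.5))
```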

[8]:

```python
from secml.figure import CFigure
%matplotlib inline

# Each row stores a flat channel-first (3, 32, 32) image; imshow expects (32, 32, 3)
img_normal = x0.tondarray().reshape((3, 32, 32)).transpose(1, 2, 0)
img_adv = adv_ds_pgd.X[0, :].tondarray().reshape((3, 32, 32)).transpose(1, 2, 0)

# Rescale the perturbation to [0, 1] so that it becomes visible
diff_img = img_normal - img_adv
diff_img -= diff_img.min()
diff_img /= diff_img.max()

fig = CFigure()

# Left: original sample, titled with its true class
fig.subplot(1, 3, 1)
fig.sp.imshow(img_normal)
fig.sp.title('{0}'.format(dataset_labels[y0.item()]))
fig.sp.xticks([])
fig.sp.yticks([])

# Center: adversarial example, titled with the class predicted after the attack
fig.subplot(1, 3, 2)
fig.sp.imshow(img_adv)
fig.sp.title('{0}'.format(dataset_labels[y_pred_pgd.item()]))
fig.sp.xticks([])
fig.sp.yticks([])

# Right: amplified perturbation
fig.subplot(1, 3, 3)
fig.sp.imshow(diff_img)
fig.sp.title('Amplified perturbation')
fig.sp.xticks([])
fig.sp.yticks([])

fig.tight_layout()
fig.show()
```
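Plotting needs two array manipulations: moving channels last and stretching the tiny perturbation to the full display range. For a channel-first (C, H, W) sample, transpose(1, 2, 0) yields the (H, W, C) layout that imshow expects. By contrast, transpose(2, 1, 0) yields (W, H, C), which shows the image mirrored across its main diagonal. The following NumPy sketch checks this on a small hypothetical image whose pixel values encode their own coordinates. The helpers chw_to_hwc and amplify are illustrative, not SecML API. amplify applies the same min-max rescaling as the diff_img lines above, plus a guard against an all-zero perturbation.

```python
import numpy as np

def chw_to_hwc(flat, shape=(3, 32, 32)):
    """Flat channel-first sample -> (H, W, C) array suitable for imshow."""
    return np.asarray(flat).reshape(shape).transpose(1, 2, 0)

def amplify(diff):
    """Shift and scale a perturbation to [0, 1] so that small changes are visible."""
    diff = diff - diff.min()
    peak = diff.max()
    return diff / peak if peak > 0 else diff

# Hypothetical 3-channel, 2x4 image: pixel (c, h, w) holds 100*c + 10*h + w.
c, h, w = np.meshgrid(np.arange(3), np.arange(2), np.arange(4), indexing="ij")
img = 100 * c + 10 * h + w                      # shape (C, H, W) = (3, 2, 4)
hwc = chw_to_hwc(img.ravel(), shape=(3, 2, 4))
print(hwc.shape)                                # (2, 4, 3)
print(hwc[1, 2])                                # [ 12 112 212]
print(img.transpose(2, 1, 0).shape)             # (4, 2, 3): width and height swapped
```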